Summary: The interaction between web2 client-server architectures and web3 systems presents security challenges. Web3 systems often rely on classic centralized components, which can create unique attack surfaces. In this post, ongoing research on the use of web2 components in web3 systems is summarized, including vulnerabilities found in the Dappnode node management framework. The post also discusses a possible root cause for a recent high-profile DeFi security incident involving the Orbit Bridge and highlights the need for holistic security auditing in web3 systems.

Key Points:

* Web2 client-server architectures interacting with web3 systems present unique security challenges.

* Web3 systems often rely on centralized components, creating overlooked attack surfaces.

* Ongoing research has identified vulnerabilities in the Dappnode node management framework.

* A possible root cause for a recent security incident involving the Orbit Bridge is discussed.

* Holistic security auditing is necessary for both decentralized and traditional application components in web3 systems.

The interaction between “web2” client-server architectures (not blockchain) and “web3” systems (blockchain) presents a unique set of security challenges. While web3 promises enhanced security and decentralization, at present the underlying infrastructure supporting web3 systems often leverages classic centralized components such as standard server, cloud, and container setups, and web-based APIs. In particular, cross-chain bridges often rely on off-chain components for critical operations such as transaction signing and event relaying, and these components present a unique attack surface which is often overlooked.

TL;DR

In this post, we summarize ongoing research into the use of web2 components in web3 systems, which led to the identification and prompt mitigation of several web-based attack paths in the popular node management framework Dappnode. Read on to learn about:

- Various 0-day vulnerabilities in a popular node management framework, Dappnode.

- How Dappnode can be exploited to gain remote administrative access to Dappnode-based systems.

- Analysis on a possible root cause & attack path behind a recent high-profile DeFi security incident affecting the Orbit Chain bridge, which appears to involve the bridge’s supporting infrastructure.

We will also elaborate on a possible root cause for the January 2024 Orbit Bridge security incident, thought to have been perpetrated by Lazarus Group (APT38) at the time of writing, stemming in part from the interaction between the Orbit validator web API and Orbit bridge router smart contracts. Finally, we highlight the commonalities between our findings and the wider challenges affecting web3 systems and their users.

Proactive Security: Dappnode findings

Dappnode is a popular open-source plug-and-play node solution for the Ethereum ecosystem, allowing users to quickly and easily set up, run or share preconfigured nodes for a variety of L1 and L2 systems. Below are high level technical details for Dappnode:

- Dappnode offers containerized versions of popular node software, referred to as Dappnode packages

- Core Dappnode system components are also containerized in a modular way

- Dappnode uses the InterPlanetary File System (IPFS) to immutably store Dappnode packages, referenced via IPFS Content Identifier (CID) hashes

- Dappnode also uses the Ethereum Naming Service (ENS) for package versioning and naming

- Dappnode provides optimized hardware preconfigured with Dappnode for enhanced support

- A stock Dappnode deployment supports three methods to connect/manage the Dappnode:

  - Local network access

  - WiFi: A stock Dappnode deployment can function as a WiFi access point which effectively segments Dappnode services from a wider local network

  - VPN: Dappnode supports the WireGuard and OpenVPN standards to allow node operators to remotely access their Dappnode deployment

During an engagement against one of our client’s web3 systems, we identified several issues in the system’s third-party Dappnode dependencies, which are summarized in this post. As NetSPI takes responsible disclosure seriously, the vulnerabilities discussed here were shared with the Dappnode team prior to the release of this post. As of DappManager version 0.2.82, the resultant attack paths have been remediated, with additional defense-in-depth improvements made available over subsequent releases.

These individual issues included:

- Post-authentication remote command execution.

- Pre-authentication cross-site scripting (XSS).

- Pre-authentication local file disclosure.

- Various infrastructure/host-related gaps such as Docker container breakout/local privilege escalation opportunities.

- Lower-risk issues such as permissive cross-origin resource sharing (CORS) policies.

Per the scope of the particular engagement, one of the goals was to demonstrate the practical risk of identified issues from the perspective of a remote, unauthenticated threat actor. By combining these issues, it was possible to build two proof-of-concept single-click exploits, described below.

1-Click Remote Node Takeover

TL;DR

Through a combination of issues, malicious Dappnode content URLs can be created, which provide remote, unauthenticated attackers with persistent back-door administrative access to targeted Dappnode systems when visited by an authenticated Dappnode operator, giving the attacker full control of the underlying host system.

Pre-authentication Reflected Cross-Site Scripting (XSS) via my.dappnode IPFS Proxy

Prior to the security patches, the Dappmanager package provided a proxy for the IPFS gateway, provided by the local IPFS node, allowing IPFS content to be served from the Dappnode management UI via Content Identifier (CID) hashes. IPFS content can be referenced and dynamically served.

The Dappnode IPFS gateway proxy did not sufficiently validate content retrieved via IPFS, allowing for malicious static web pages to be served from the my.dappnode domain. By uploading an XSS payload to IPFS and referencing it via the my.dappnode/ipfs IPFS proxy URL, reflected XSS was possible.

In principle, this was akin to an XSS vulnerability arising from the insecure handling of contents from file uploads or cloud storage. As IPFS was also used for image and icon retrieval, this issue could be exploited against unauthenticated users with network access to the Dappnode.

This issue functioned as an “entry point” into a Dappnode operator’s internal network, as the final URL was indistinguishable from a regular Dappnode IPFS link.
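One common way to limit the impact of serving untrusted gateway content from a trusted origin is to restrict which content types are relayed and to attach defensive response headers. The sketch below is illustrative only and does not reflect the actual Dappnode patch; the helper name `hardenedProxyHeaders` and the MIME allowlist are assumptions made for this example.

```javascript
// Hypothetical helper, not taken from the Dappnode codebase: decide how a
// management UI proxy should relay content fetched from an IPFS gateway.
const SAFE_IMAGE_TYPES = new Set(["image/png", "image/jpeg", "image/gif", "image/webp"]);

function hardenedProxyHeaders(upstreamContentType) {
  // Normalise values such as "Image/PNG; charset=binary" to "image/png".
  const mime = String(upstreamContentType || "").split(";")[0].trim().toLowerCase();

  if (!SAFE_IMAGE_TYPES.has(mime)) {
    // Refuse HTML, SVG, JavaScript, and anything else that can carry script.
    return { allowed: false, headers: {} };
  }
  return {
    allowed: true,
    headers: {
      "Content-Type": mime,
      // Stop browsers from guessing a different, scriptable content type.
      "X-Content-Type-Options": "nosniff",
      // Even if the content is rendered, it gets no script or network access.
      "Content-Security-Policy": "default-src 'none'; sandbox",
    },
  };
}
```

Because the proxy was used for image and icon retrieval, an allowlist of raster image types is a plausible starting point, but the right list depends on what the UI actually needs to load.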

Post-authentication Remote Command Execution (RCE) in privileged Dappmanager container

The Dappmanager package serves as the core component of the Dappnode framework and is responsible for container management and updates. It is managed by the Dappmanager UI, which is intended only to be accessible locally by node operators.

An instance of authenticated remote command injection was found in a management-related API call, which provided command execution in the context of the Dappmanager container.
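The affected API call is not named here, so the following is only a generic sketch of how command injection of this kind is usually avoided in Node.js: validate each user-supplied value against a strict pattern and pass arguments as an array to `execFile`, which does not spawn a shell. The function names, the `docker logs` use case, and the name pattern are assumptions for illustration.

```javascript
const { execFile } = require("node:child_process");

// Conservative allowlist for container names (an assumption for this sketch).
const CONTAINER_NAME = /^[a-zA-Z0-9][a-zA-Z0-9_.-]{0,127}$/;

// Build the argument vector for `docker logs --tail <n> <container>`.
function buildLogsArgs(containerName, tailLines) {
  if (typeof containerName !== "string" || !CONTAINER_NAME.test(containerName)) {
    throw new Error("invalid container name");
  }
  if (!/^\d{1,5}$/.test(String(tailLines))) {
    throw new Error("invalid tail value");
  }
  const tail = Number(tailLines);
  if (tail < 1 || tail > 10000) {
    throw new Error("invalid tail value");
  }
  return ["logs", "--tail", String(tail), containerName];
}

// execFile runs the binary directly, so shell metacharacters such as ";" or
// "$()" in an argument are never interpreted as commands.
function fetchLogs(containerName, tailLines, callback) {
  execFile("docker", buildLogsArgs(containerName, tailLines), callback);
}
```

Input validation alone does not remove the risk that comes with a container holding the Docker socket, but it closes the string-concatenation path that typically makes injection possible.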

As the Dappmanager container requires access to the host system’s Docker socket for container management, it was possible to break out of the container and gain an administrative shell on the host by creating a new container with access to the host’s filesystem and network stack.

Using the XSS issue affecting the IPFS component, an XSS payload could have been created which forced a victim’s browser to abuse the command injection vulnerability against their own Dappnode. The end result was the means to remotely compromise a user’s Dappnode after visiting a single link.

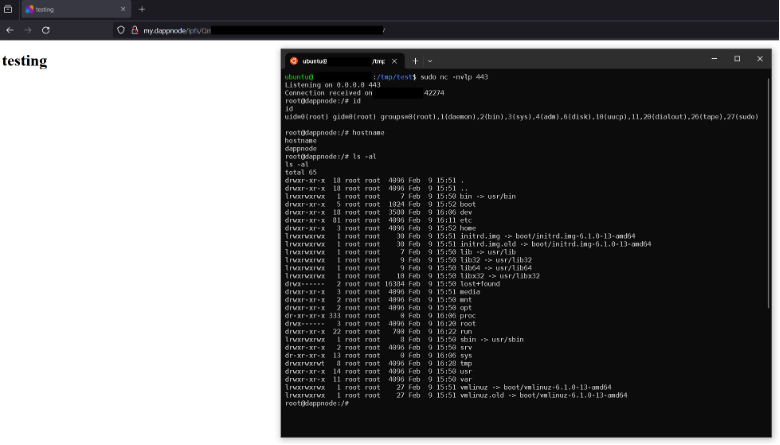

This is shown in the screenshot below, where a reverse TCP shell was executed with root privileges on the host Dappnode system upon the victim visiting the malicious URL:

1-Click Remote VPN Config Exfiltration

Local File Disclosure and Cross-Origin Resource Sharing (CORS) policies in WireGuard API

WireGuard is a connectionless VPN protocol allowing for easy and secure access between clients and server. A WireGuard server will only accept clients with verified public keys.

The Dappnode’s WireGuard package offers a simple API for retrieving WireGuard client configuration files. A local file disclosure issue was identified in this API. While only files with a specific extension could be disclosed via this issue, it still allowed attackers with access to the Dappnode’s network to exfiltrate WireGuard client and server profiles.
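A typical defence against this class of file disclosure is to accept only a bare file name and then confirm that the resolved path stays inside the intended directory. The sketch below assumes a `.conf` extension and a hypothetical `resolveProfilePath` helper; neither is taken from the WireGuard package, and the check would not by itself address who is allowed to request a profile.

```javascript
const path = require("node:path");

// Hypothetical helper: map a requested profile name to a file on disk.
function resolveProfilePath(baseDir, requestedName) {
  // Accept only a bare file name such as "laptop.conf": no slashes, no dots
  // other than the extension separator.
  if (typeof requestedName !== "string" || !/^[A-Za-z0-9_-]{1,64}\.conf$/.test(requestedName)) {
    throw new Error("invalid profile name");
  }
  const root = path.resolve(baseDir);
  const candidate = path.resolve(root, requestedName);
  // Defence in depth: the resolved path must remain inside the base directory.
  if (!candidate.startsWith(root + path.sep)) {
    throw new Error("path escapes base directory");
  }
  return candidate;
}
```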

Cross-Origin Resource Sharing (CORS) policies

CORS misconfigurations are particularly useful for attackers looking to weaponize XSS issues because a permissive CORS policy effectively nullifies the Same Origin Policy, allowing requesting origins a degree of access over the contents returned from requests that they otherwise would not have.

The WireGuard API was configured with an edge-case CORS misconfiguration that we usually encounter while performing network and application penetration tests, which allowed any requesting origin to view its responses.
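For contrast, a stricter policy echoes the `Access-Control-Allow-Origin` header only for origins on an explicit allowlist. The sketch below is a minimal, framework-free illustration; the function name and the example origin are assumptions. Note that an allowlist does not help if an allowed origin is itself affected by XSS, as was the case for the management UI here.

```javascript
// Hypothetical helper: compute CORS response headers for a request origin.
function corsHeadersFor(requestOrigin, allowedOrigins) {
  if (typeof requestOrigin === "string" && allowedOrigins.includes(requestOrigin)) {
    return {
      "Access-Control-Allow-Origin": requestOrigin,
      // Caches must not reuse this response for a different origin.
      "Vary": "Origin",
    };
  }
  // No CORS headers: the browser's Same Origin Policy blocks cross-origin reads.
  return {};
}

// Example: only the management UI origin (hypothetical value) may read responses.
const exampleHeaders = corsHeadersFor("http://my.dappnode", ["http://my.dappnode"]);
```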

Proof-of-concept exploit code was developed to combine these issues with the IPFS reflected XSS vulnerability. As before, malicious URLs may be created which, when visited from a victim’s internal Dappnode network, result in their Dappnode client and server WireGuard VPN credentials being exfiltrated to an attacker’s web server, allowing for persistent, anonymous access to the operator’s local Dappnode network, in addition to other VPN-specific attacks.

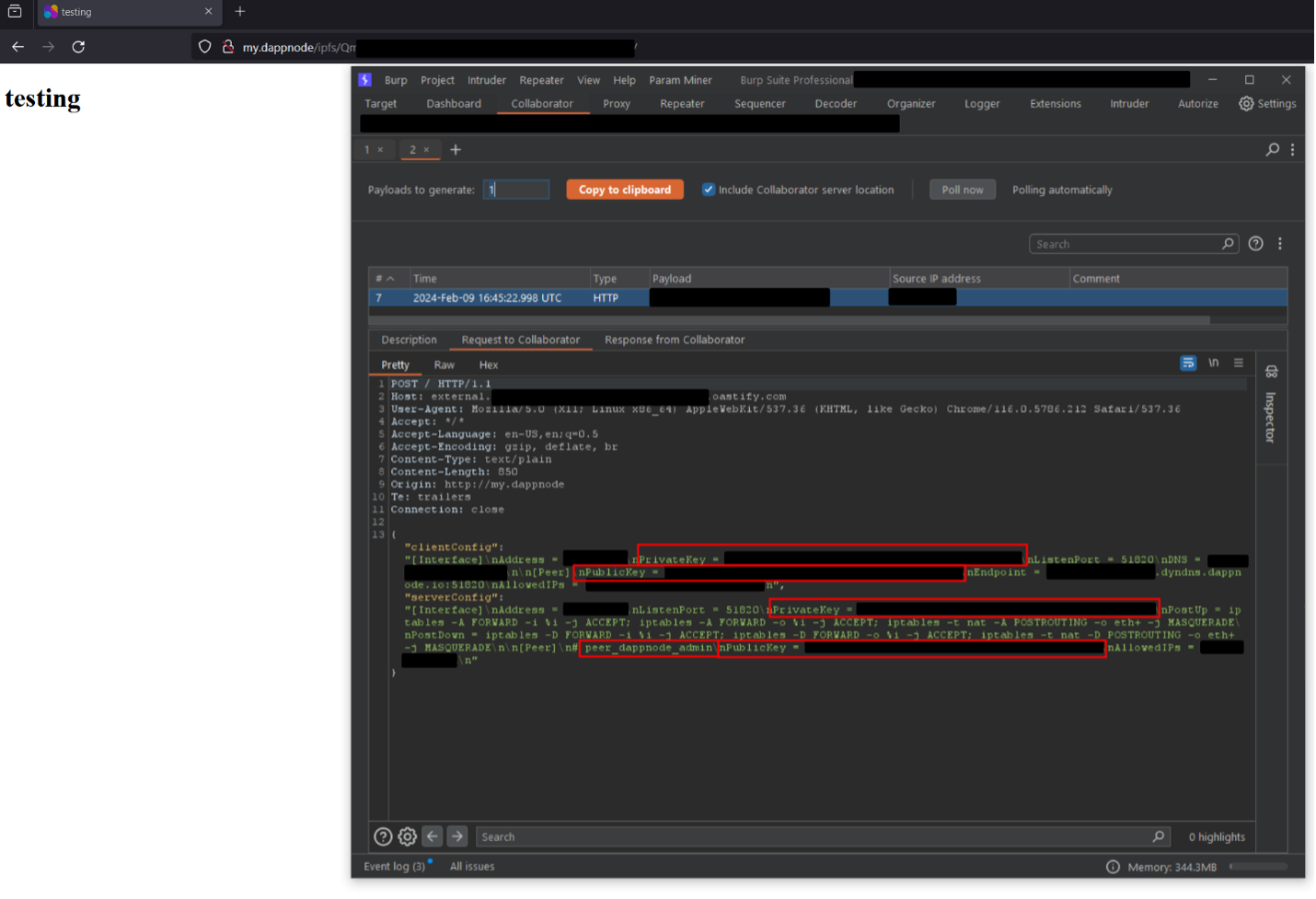

This is shown in a screenshot below, where the node’s VPN profiles were exfiltrated to an external Burp Suite Collaborator domain.

During their own security audits, our web3 partner Blaize has identified similar issues in the traditional application components used by some decentralized systems, such as insufficient signature validation and insecure secret storage. A holistic security auditing approach is therefore recommended, encompassing the adversarial-based testing of both decentralized and traditional application components, in addition to security design and architecture reviews.

The concept of bridge security emerges as a critical focal point in the broader discussion of web3 vulnerabilities. Bridges, which facilitate the transfer of assets between different blockchains, represent a vital infrastructure component within the web3 ecosystem. However, they also introduce unique security challenges, as they must securely manage and verify transactions across disparate networks with varying security protocols and assumptions.

Bridge Security Concepts

Effective security auditing of both the on-chain and off-chain aspects of a project or solution is key to preventing breaches. This section breaks down a potential attack vector for the recent Orbit Bridge breach.

Most blockchain frameworks – including base Layer 1 chains such as Ethereum mainnet or Layer 2 scaling solutions such as Arbitrum – are islands unto themselves, with no means to communicate between each other.

To allow blockchain interoperability, cryptocurrency bridges were designed to act as relay stations which allow information and assets to be exchanged between otherwise incompatible chains. They are essentially accounting books in which funds and information sent through one blockchain are calculated and distributed accordingly on another blockchain.

Bridges are notoriously difficult to secure in part because they are affected by what is referred to as the “Interoperability Trilemma”. It broadly states that bridges may only effectively cater to any two of the three following properties:

- Trustlessness – Like the underlying protocols bridges operate on, this refers to the ability of a bridge to operate without requiring users to place trust in any specific party or intermediary. In a trustless system, security and operations are decentralized and based on cryptographic proofs and consensus mechanisms, removing the need for a central authority.

- Extensibility – An extensible bridge can seamlessly integrate different blockchains, regardless of their underlying architecture or consensus mechanisms.

- Generalizability – A generalizable bridge can interpret different smart contract languages and execution environments, enabling more sophisticated interoperability, like triggering events or functions on one blockchain based on transactions or smart contract states from another. Achieving high levels of generalizability, particularly while limiting opportunities for security issues, is challenging due to the diverse nature of blockchain protocols and smart contract languages.

The Interoperability Trilemma has its roots in the more general Blockchain Trilemma, first outlined by Vitalik Buterin to describe the compromises often made between security, decentralization, and scalability when designing new blockchain protocols.

Although all three facets of the Interoperability Trilemma have inherent security implications, a given bridge’s degree of trustlessness can result in it being classified as either a trusted bridge (a bridge that heavily or totally relies on a central authority) or a trustless bridge, the operations of which are primarily maintained by means of smart contracts and on-chain, decentralized logic.

Even in the case of trustless bridges, the scalability challenges inherent to on-chain computation have resulted in bridge designs which move resource-intensive, sensitive, or otherwise difficult-to-implement features off-chain. As is the case for many decentralized applications, trustless bridges can be prone to some degree of centralization.

However, Blaize also notes that bridge engineers are actively tackling the issue of centralization in various ways. One such way, as implemented in the Rainbow Bridge, involves implementing decentralized bridge relayers, wherein key management issues are delegated to each relayer individually. This aims to reduce the reliance on single relayers, as the compromise of one relayer is less likely to lead to the compromise of the overall bridge.

Orbit Bridge – Pivoting from off-chain to on-chain

The Orbit Bridge is a cross-chain protocol built on the Orbit Chain. It was designed to allow for cross-chain asset transfers between layer 1 and layer 2 chains, including Ethereum, Ripple, and Arbitrum. On the terminal ends of the bridge on each chain are “Vault” contracts, which hold bridge users’ funds. For a user to withdraw funds from the bridge, the withdrawal transaction is required to be signed by a minimum number of off-chain bridge validators.

As mentioned earlier, in the early hours of January 1, 2024, a high-profile exploit occurred against the Orbit Bridge, resulting in approximately $81.5 million worth of various tokens being stolen. The bridge was subsequently disabled by Ozys, the company behind the bridge’s development. The bridge remains offline as of writing.

The specific root cause of the incident has yet to be publicly released as of writing, with a January 2024 official statement from Orbit Chain reiterating that the attack path is not yet fully clear.

We conducted research into the incident, and a possible attack path was identified. In keeping with the theme of this post, this potential attack path involves the abuse of certain web-based bridge validator APIs, in conjunction with a design flaw in the on-chain transaction validation process. This possible attack path is discussed below.

Note that this is only a possible attack path, and it has in no way been validated for accuracy by Orbit Chain, Ozys, or any affiliated party. These are only inferences made against open-source codebases and publicly available documentation, and further investigatory efforts are likely required before a definitive root cause can be attributed.

Additionally, as the Orbit Bridge RPC endpoints were taken offline following the incident and remain unavailable as of writing, it is not possible to definitively confirm this attack path. As such, some level of educated conjecture may be evident in the research.

On-chain component analysis

The issues in the affected smart contracts became clear shortly after the incident, evident from the EthVault contract’s withdraw and _validate functions as seen in their implementation below. Vaults on other supported chains contained similar logic:

```solidity
///@param bytes32s [0]:govId, [1]:txHash
///@param uints [0]:amount, [1]:decimals
function withdraw(
    address hubContract,
    string memory fromChain,
    bytes memory fromAddr,
    bytes memory toAddr,
    bytes memory token,
    bytes32[] memory bytes32s,
    uint[] memory uints,
    uint8[] memory v,
    bytes32[] memory r,
    bytes32[] memory s
) public onlyActivated {
    require(bytes32s.length >= 1);
    require(bytes32s[0] == sha256(abi.encodePacked(hubContract, chain, address(this))));
    require(uints.length >= 2);
    require(isValidChain[getChainId(fromChain)]);

    bytes32 whash = sha256(abi.encodePacked(hubContract, fromChain, chain, fromAddr, toAddr, token, bytes32s, uints));

    require(!isUsedWithdrawal[whash]);
    isUsedWithdrawal[whash] = true;

    uint validatorCount = _validate(whash, v, r, s);
    require(validatorCount >= required);

    address payable _toAddr = bytesToAddress(toAddr);
    address tokenAddress = bytesToAddress(token);
    if(tokenAddress == address(0)){
        if(!_toAddr.send(uints[0])) revert();
    }else{
        if(tokenAddress == tetherAddress){
            TIERC20(tokenAddress).transfer(_toAddr, uints[0]);
        }
        else{
            if(!IERC20(tokenAddress).transfer(_toAddr, uints[0])) revert();
        }
    }

    emit Withdraw(hubContract, fromChain, chain, fromAddr, toAddr, token, bytes32s, uints);
}

…

function _validate(bytes32 whash, uint8[] memory v, bytes32[] memory r, bytes32[] memory s) private view returns(uint){
    uint validatorCount = 0;
    address[] memory vaList = new address[](owners.length);

    uint i=0;
    uint j=0;

    for(i; i<v.length; i++){
        address va = ecrecover(whash,v[i],r[i],s[i]);
        if(isOwner[va]){
            for(j=0; j<validatorCount; j++){
                require(vaList[j] != va);
            }

            vaList[validatorCount] = va;
            validatorCount += 1;
        }
    }

    return validatorCount;
}
```

A call to the withdraw function requires details for the transaction (source chain, sender/recipient/token addresses, amount, etc.), and signature verification variables (V, R, S) derived from a validator’s signature. For a more detailed understanding of these values, refer to the Ethereum Yellow Paper, however for this article, we can treat these values as the actual signature.

The main issue with the Vault contracts was that the withdraw functions solely relied on provided withdrawal transaction hashes being signed by a certain number of validators, and not the actual transaction details themselves.

As long as the Vault contracts registered that at least the required number of validators had signed a transaction hash (7 at the time of the incident), the withdrawal from any account providing the V/R/S signature values and transaction hash would be processed, provided that the specific transaction hash has not been used before. The attacker abused this to execute several withdrawal requests, mainly against the Vault on Ethereum mainnet, for repeated amounts of ETH, wBTC, USDT, USDC, and DAI.
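The counting logic in `_validate` can be modelled off-chain to make its behaviour easier to follow. The sketch below is a simplified JavaScript model, not the contract itself: it takes already-recovered signer addresses as input (the `ecrecover` step is omitted), and the function name is an assumption.

```javascript
// Simplified model of the Vault's _validate loop.
// recoveredSigners: addresses recovered from each (v, r, s) tuple, in order.
// owners: addresses for which isOwner is true in the contract.
function countOwnerSignatures(recoveredSigners, owners) {
  const ownerSet = new Set(owners.map((a) => a.toLowerCase()));
  const seen = new Set();
  for (const signer of recoveredSigners) {
    const addr = signer.toLowerCase();
    // Signatures recovering to non-owner addresses are skipped, not rejected.
    if (!ownerSet.has(addr)) continue;
    // Mirrors require(vaList[j] != va): a repeated owner reverts the call.
    if (seen.has(addr)) throw new Error("duplicate validator signature");
    seen.add(addr);
  }
  return seen.size;
}

// The withdrawal proceeds when the count reaches the configured threshold.
function meetsThreshold(recoveredSigners, owners, required) {
  return countOwnerSignatures(recoveredSigners, owners) >= required;
}
```

Under this model, extra signatures from unrelated addresses neither help nor hinder a withdrawal; only the number of distinct owner signatures over the hash matters.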

While the contracts certainly should have validated that transaction arguments correspond to provided signature structures, this incident would not have been possible without the attacker gaining access to valid transaction signatures from validators, prior to the signatures being included in the Vault’s isUsedWithdrawal mapping.

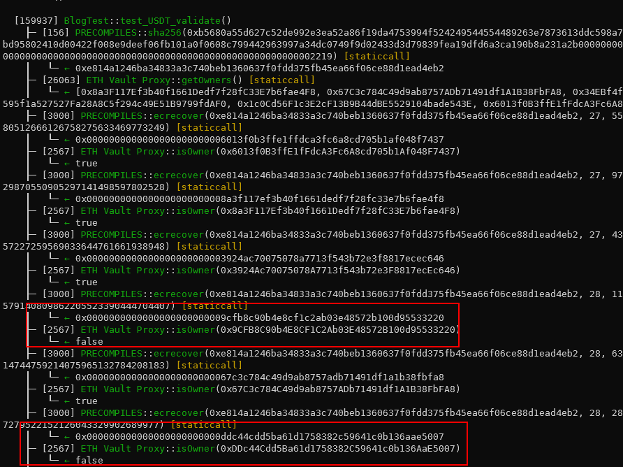

An April 2022 security audit of the Vault contracts by security firm Theori highlighted a potential signature replay issue affecting the same function; however, no means to “fake” a signature were identified in the contracts or in the attacking address’s on-chain activity in the time leading up to the incident. Furthermore, signatures provided by the attacker can be confirmed to originate from Orbit Bridge validators via the following Forge proof-of-concept test suite.

For these reasons, it was initially speculated that the private keys for 7 required validators were somehow compromised, and that the attackers used them to sign their own transaction hashes. However, the official statement from Ozys implied that following investigations, it was assumed that neither a specific smart contract vulnerability, nor an outright private key compromise were to blame for the incident.

Note however that some of the attacker’s transactions, such as the theft of 30 million USDT, also contained signature values for addresses which are not included in the EthVault’s isOwner mapping, in addition to the required number of validator signatures. This is seen in the proof-of-concept output below.

Possible reasons for the inclusion of these additional signatures from non-validator addresses in the exploit transaction include re-use of the attacker’s tools used to generate the signature, which may have referenced additional addresses meant for use against vault contracts on chains aside from Ethereum mainnet.

Off-chain component analysis

If private keys were not compromised, it would follow that there may have been exploitable flaws in the signature generation process, which ultimately allowed an attacker to sign arbitrary transaction data on behalf of validators.

The Orbit Bridge documentation offers some clues as to where such a flaw may originate. The documentation describes the off-chain validators, and instructions to deploy a validator in AWS. The process for being formally vetted as a bridge validator is also referenced.

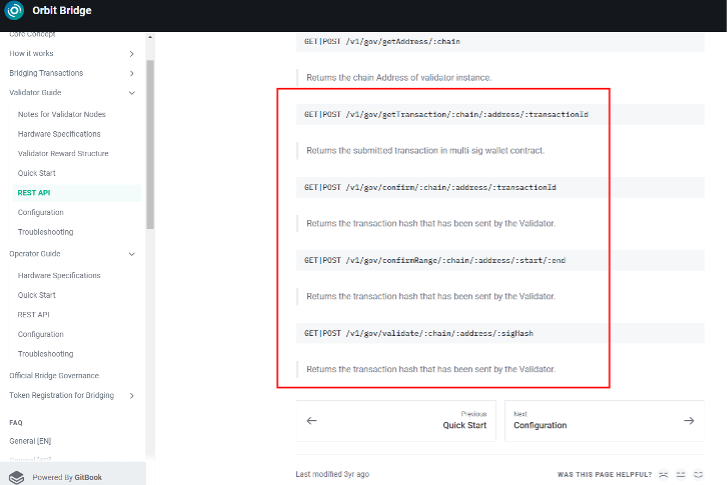

Web API endpoints for confirming and validating transaction hashes are referenced in the documentation. However, the documentation generally lacks specific details about the validation process.

The Orbit Chain GitHub organization includes the bridge-dockerize repository, a containerized version of the validator codebase for deployment in AWS. The bridge-contract repository also contains versions of the Vault contracts for supported chains, including the Vault for the Orbit Chain itself.

The validator codebase references these API routes:

bridge-dockerize/routes/v1/gov.js:

```javascript
…
router.get("/getTransaction/:chain/:migAddr/:transactionId", async function (req, res, next) {
    …
    return res.json(await govInstance.getTransaction(chain, mig, tid));
})

router.get("/confirm/:chain/:migAddr/:transactionId/:gasPrice/:chainId", async function (req, res, next) {
    …
    return res.json(await govInstance.confirmTransaction(chain, mig, tid, gasPrice, chainId));
})
…
router.get("/validate/:migAddr/:sigHash", async function (req, res, next) {
    const mig = req.body && req.body.migAddr || req.params && req.params.migAddr;
    const sigHash = req.body && req.body.sigHash || req.params && req.params.sigHash;

    return res.json(await govInstance.validateSigHash(mig, sigHash));
});
…
```

Notably, the validator itself does not implement any kind of access control for the APIs. Deploying the validator container exposes the API on port 17090 of the host system via Docker’s default bridge network driver. It is assumed however that the validator API is not intended to be exposed publicly, and that the docker image is intended to be restricted to an internal network.
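As an illustration of what application-level access control could look like, the sketch below shows an Express-style middleware that rejects requests lacking a shared bearer token. It is not part of the bridge-dockerize codebase; the function name, the header scheme, and any environment variable used to supply the token are assumptions.

```javascript
const crypto = require("node:crypto");

// Hypothetical middleware factory: require "Authorization: Bearer <token>".
function requireApiToken(expectedToken) {
  const expected = crypto.createHash("sha256").update(expectedToken).digest();
  return function (req, res, next) {
    const header = (req.headers && req.headers.authorization) || "";
    const presented = header.startsWith("Bearer ") ? header.slice(7) : "";
    // Hash both sides so timingSafeEqual always compares equal-length buffers.
    const actual = crypto.createHash("sha256").update(presented).digest();
    if (presented && crypto.timingSafeEqual(actual, expected)) {
      return next();
    }
    res.statusCode = 401;
    res.end("Unauthorized");
  };
}
```

In an Express application this would be registered ahead of the routes, for example with `router.use(requireApiToken(process.env.VALIDATOR_API_TOKEN))`, where `VALIDATOR_API_TOKEN` is a hypothetical variable. Network-level restrictions, such as publishing the container port on the loopback interface only (`-p 127.0.0.1:17090:17090`), would complement rather than replace such a check.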

The validator’s validateSigHash function, called by visiting the /validate/ route, is shown below. The function takes two arguments: the address of the Vault contract multisig, and a transaction hash, sigHash:

bridge-dockerize/src/evm/index.js:

```javascript
…
async validateSigHash(multisig, sigHash) {
    if(this.chainName !== "ORBIT") return "Invalid Chain";
    if(multisig.length !== 42 || sigHash.length !== 66) return "Invalid Input";

    const orbitHub = instances.hub.getOrbitHub();
    const validator = {address: this.account.address, pk: this.account.pk};

    let mig = new orbitHub.web3.eth.Contract(this.multisigABI, multisig);
    …
```

However, arguments are directly taken from the /validate URL, and are only verified for length. The expected sigHash length of 66 matches the length of the SHA256 transaction hashes (“0x” + 64 hexadecimal characters) generated in the Vault contracts.

The multiSig contract address is then used to reference a contract object mig, using an ABI which closely matches the previously shown Vault contracts. Therefore, the ABI is essentially used as an interface for the address, which may be any contract on the Orbit Chain network which sufficiently implements the defined functions.
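A stricter version of this input handling might check the character set as well as the length, and compare the supplied contract address against a fixed list of known multisig addresses. The sketch below is an assumption about what such a check could look like; the function name, return strings, and allowlist are hypothetical and do not come from the validator codebase.

```javascript
const HEX_ADDRESS = /^0x[0-9a-fA-F]{40}$/;
const HEX_HASH = /^0x[0-9a-fA-F]{64}$/;

// Hypothetical pre-check: returns an error string, or null when the input
// may proceed to the rest of the validation logic.
function checkSigHashInput(multisig, sigHash, trustedMultisigs) {
  if (typeof multisig !== "string" || !HEX_ADDRESS.test(multisig)) return "Invalid Input";
  if (typeof sigHash !== "string" || !HEX_HASH.test(sigHash)) return "Invalid Input";
  // Only contracts the operator has explicitly configured are accepted.
  const trusted = trustedMultisigs.map((a) => a.toLowerCase());
  if (!trusted.includes(multisig.toLowerCase())) return "Untrusted Multisig";
  return null;
}
```

An address allowlist narrows which contracts can influence the checks, but it does not change the fact that the endpoint signs a caller-supplied hash.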

bridge-dockerize/src/evm/index.js:

```javascript
    …
    let confirmedList = await mig.methods.getHashValidators(sigHash).call().catch(e => {return;});
    if(!confirmedList) return "GetHashValidators Error";

    let myConfirmation = !!(confirmedList.find(va => va.toLowerCase() === validator.address.toLowerCase()));

    let required = await mig.methods.required().call().catch(e => {return;});
    if(!required) return "GetRequired Error";

    if(myConfirmation || parseInt(required) === parseInt(confirmedList.length))
        return "Already Confirmed"
    …
```

Various Vault contract functions are then called using the mig contract reference. Notice however that the actual values returned by the contract are also not subject to stringent verification. This is possibly because the developers assumed that invalid contract calls would revert on-chain, and would be caught by default web3.js library error handling.

In any case, because any Orbit Chain contract address can be specified, it would be possible to return any value necessary to satisfy these checks, such as the myConfirmation check on line 841, which only checks that a validator address is included in the return values from the Vault/Multisig contract’s getHashValidators function.
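To make this reasoning concrete, the checks can be modelled locally with a stand-in object in place of the on-chain contract. The sketch below is a simplified, synchronous model written for this post: `preSignChecks` and `permissiveStub` are hypothetical names, no network calls are made, and the final return value merely marks the point at which the real function would go on to sign.

```javascript
// Simplified model of the checks performed before signing.
function preSignChecks(contract, sigHash, validatorAddress) {
  let confirmedList;
  try { confirmedList = contract.getHashValidators(sigHash); } catch (e) { confirmedList = undefined; }
  if (!confirmedList) return "GetHashValidators Error";

  const myConfirmation = confirmedList.some(
    (va) => va.toLowerCase() === validatorAddress.toLowerCase()
  );

  let required;
  try { required = contract.required(); } catch (e) { required = undefined; }
  if (!required) return "GetRequired Error";

  if (myConfirmation || parseInt(required) === confirmedList.length) return "Already Confirmed";
  return "Would Sign";
}

// Stand-in for an arbitrary contract whose return values the caller controls.
const permissiveStub = {
  getHashValidators: () => [], // an empty array is truthy, so the first check passes
  required: () => "7",         // any non-zero value that differs from the list length
};
```

With the stub above, every check passes for any hash, which is the behaviour the preceding paragraph describes for a caller-chosen contract address.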

The validator’s private key is then used to sign the sigHash argument, before the resulting signature’s V, R, and S values are collected in an array named params:

bridge-dockerize/src/evm/index.js:

```javascript
    …
    let sender = Britto.getRandomPkAddress();
    if(!sender || !sender.pk || !sender.address){
        return "Cannot Generate account";
    }

    let signature = Britto.signMessage(sigHash, validator.pk);

    let params = [
        validator.address,
        sigHash,
        signature.v,
        signature.r,
        signature.s,
    ]

    let txData = {
        from: sender.address,
        to: multisig,
        value: orbitHub.web3.utils.toHex(0)
    }
    …
```

The remainder of the validateSigHash function is shown below. On line 873 of the validator codebase, the params array, including the signature values, is used as the arguments to the validate function in the Orbit Chain’s OrbitVault contract, before the OrbitHub contract is used to broadcast a signed transaction using the data returned from the OrbitVault contract via web3.js.

bridge-dockerize/src/evm/index.js:

```javascript
    …
    let gasLimit = await mig.methods.validate(...params).estimateGas(txData).catch(e => {return;});
    if(!gasLimit) return "EstimateGas Error";

    let data = mig.methods.validate(...params).encodeABI();
    if(!data) return "EncodeABI Error";

    txData.data = data;
    txData.gasLimit = orbitHub.web3.utils.toHex(FIX_GAS);
    txData.gasPrice = orbitHub.web3.utils.toHex(0);
    txData.nonce = orbitHub.web3.utils.toHex(0);

    let signedTx = await orbitHub.web3.eth.accounts.signTransaction(txData, "0x"+sender.pk.toString('hex'));
    let tx = await orbitHub.web3.eth.sendSignedTransaction(signedTx.rawTransaction).catch(e => {console.log(e)});
    if(!tx) return "SendTransaction Error";

    return tx.transactionHash;
}
…
```

Note that only the Orbit Chain’s own Vault contracts on bridge destination chains implemented such a public validate function, and Vault implementations on other chains did not.

Below is the public validate function, inherited by the OrbitVault implementation contract from the MessageMultiSigWallet contract.

```solidity
…
contract OrbitVaultStorage {
    …
    mapping (bytes32 => bool) public validatedHashs;
    mapping (uint => bytes32) public hashs;
    uint public hashCount = 0;
    mapping (bytes32 => uint) public validateCount;
    mapping (bytes32 => mapping(uint => uint8)) public vSigs;
    mapping (bytes32 => mapping(uint => bytes32)) public rSigs;
    mapping (bytes32 => mapping(uint => bytes32)) public sSigs;
    mapping (bytes32 => mapping(uint => address)) public hashValidators;
    …
```

Therefore, calling the /validate API endpoint with any 64-character hexadecimal string appears to publicly expose the V/R/S values of the string’s signature via the OrbitVault contract on Orbit Chain. Crucially, the Orbit Chain Vault was also the only contract to publicly expose V/R/S values via getters. Other Vault/Multisig contract implementations did not include these mappings in storage.

Recall that transaction details submitted to the Vaults’ withdraw functions are used to generate a SHA256 whash transaction hash. This means that should an attacker be able to call this API with a transaction hash derived from arbitrary transaction details, they would effectively be able to expose the bridge validator’s signature via the OrbitVault contract, or possibly their own malicious contract on the Orbit Chain, before the transaction has been executed.

Finally, another potential attack vector could lie within the dockerized validator’s configuration, where the private key is defined in the following example configuration file:

bridge-dockerize/.example.env:

```
VALIDATOR_PK=

# EXPANDED NODE RPC ex) infura, alchemy, etc...
# Type of value must be an array.
# ex) ["https://mainnet.infura.io/v3/[PROJECT_ID]", "https://eth-mainnet.g.alchemy.com/v2/[PROJECT_ID]"]
AVAX=[]
BSC=[]
CELO=[]
ETH=[]
FANTOM=[]
HARMONY=[]
HECO=[]
KLAYTN=[]
MATIC=[]
XDAI=[]

#KAS CREDENTIAL
KAS_ACCESS_KEY_ID=
KAS_SECRET_ACCESS_KEY=

#TON
TON_API_KEY=
…
```

Testing showed that the private key was expected in plaintext format. More interestingly though, it appears that the same validator private key is used to sign transactions for all supported chains. This likely means that signatures generated on the Orbit Chain will be valid on all supported chains, barring nonstandard configurations such as the validator EOA addresses on certain chains being abstracted accounts.

Therefore, it would be possible for an attacker with network access to the validator API to execute a kind of cross-chain signature “replay” attack, using values from the OrbitVault contract (on the Orbit Chain network) public vSig/rSig/sSig mappings as the signature to be used in a malicious call to the EthVault contract on Ethereum mainnet.
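One general technique for preventing a signature produced in one context from being accepted in another is domain separation: the signer binds a purpose tag, chain identifier, and verifying contract into the digest before signing, and the verifier recomputes the same digest. EIP-712 standardizes this idea for Ethereum. The sketch below only illustrates the principle with Node.js hashing; the field names and purpose strings are assumptions, and the on-chain verifier would have to implement the same scheme for it to be effective.

```javascript
const crypto = require("node:crypto");

// Hypothetical digest construction that binds signing context to a payload hash.
function domainSeparatedDigest(domain, payloadHash) {
  // JSON array encoding keeps field boundaries unambiguous.
  const encoded = JSON.stringify([
    domain.purpose,
    domain.chainId,
    domain.verifyingContract.toLowerCase(),
    payloadHash.toLowerCase(),
  ]);
  return "0x" + crypto.createHash("sha256").update(encoded).digest("hex");
}

// The same payload hash yields different digests in different contexts, so a
// signature over one digest does not verify against the other.
const payload = "0x" + "ab".repeat(32);
const validateDigest = domainSeparatedDigest(
  { purpose: "hub-validate", chainId: "orbit", verifyingContract: "0x" + "11".repeat(20) },
  payload
);
const withdrawDigest = domainSeparatedDigest(
  { purpose: "vault-withdraw", chainId: "ethereum", verifyingContract: "0x" + "22".repeat(20) },
  payload
);
```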

The possible attack path can therefore be summarized as follows:

- Attackers identify at least 7 Bridge validator deployments (or validator deployments configured with the private key for up to 7 approved validator addresses) for validator EOA accounts marked as owners/validators in the target Vault contracts.

- Attackers gain access to the validator APIs, possibly abusing weakened ingress network controls on Ozys’ internal/AWS networks.

- Attackers generate their own transaction hashes in the same format used by the Vault contracts, which include details such as amount, toAddress, fromAddress, etc., and call the validator API endpoints with this transaction hash and the address of the OrbitVault contract, deployed on the Orbit Chain network.

- This causes the API to post the V/R/S signature values for the attacker’s transaction hash to the OrbitVault contract, which exposes these values in the contract’s public vSig, rSig, and sSig mappings.

- The same validator addresses are used across supported chains, so attackers may simply query the OrbitVault contract’s public getter functions to get the signature values, and use them to call the withdraw functions on the supported chain’s Vault contracts to execute their signed transactions.

Counterpoints

The following potential outliers cast doubt onto this theory:

- Different node codebase: The validator codebase in the bridge-dockerize public repository may not be the exact codebase used by actual Orbit Bridge validators at the time of the attack.

  - The Orbit Bridge documentation does not suggest this however, as it makes several references to the public repository.

- Abstracted validator accounts: Similarly, if a different validator codebase which abstracted validator addresses was in use at the time of the attack, then an abstracted validator’s address may differ between chains.

  - However, no evidence of account abstraction was observed in the validator codebase or affected contracts.

- Specific transaction amounts: In theory, nothing would have stopped the attackers from generating a transaction hash for the entire EthVault contract’s balance, allowing them to steal an affected Vault contract’s balance in one transaction. However, for certain transactions, the attackers were seen to withdraw specific, repeating amounts from the vault contracts instead, particularly for USDT transactions.

  - This may be because the attackers did not have direct access to the validator API, and instead accessed the API via a compromised intermediate downstream system (e.g., the bridge UI, some middleware API, etc.) which only allowed specific amounts of a given token to be included in a hash value before it was signed.

Possible initial access methods

As for how the attackers got into a position to call the validator APIs for the required number of validator instances, the January 25, 2024, statement from Ozys describes the following details leading up to the incident:

- November 20, 2023: Ozys’ then CISO issues a voluntary retirement decision.

- November 22, 2023:

  - Ozys’ then CISO had “arbitrarily changed firewall policies”.

  - An information security specialist at Ozys then also “abruptly made the firewall vulnerable”.

- December 6, 2023: The information security specialist left the company.

Not much is known about the bridge’s governance structure, or where the validators were hosted. However, if the details in the statement are indeed related to the incident, it is possible that some means to access all validator instances existed within Ozys’ internal network, and that the attackers took advantage of the lax egress network controls to identify and abuse these means to interact with the validator APIs.

A less likely scenario is that Ozys themselves maintained a relatively large pool of validators, which were deployed in (or otherwise accessible from) Ozys’ internal network. However, as each transaction executed by the attackers appeared to use signatures generated by different sets of validators, this scenario would only be possible if a large majority of validators were under Ozys’ direct control.

If the firewall issues were in fact incidental, then several other possibilities exist:

- The attackers may have instead independently identified the locations of the bridge validators and targeted the AWS environments of individual DAO participants.

- A server-side application security flaw (e.g., SSRF) in the bridge front-end or a similar dApp may have allowed attackers to target the validator APIs through downstream components.

- A client-side attack against individual validator operators may have been used to reach their validator instances.

Wider Implications

Our research into the integration of web2 systems within the web3 environment reveals a complex landscape of security challenges. The findings point to a broader trend in the emerging web3 landscape: the persistence of web2 security issues in new, decentralized contexts.

As the industry moves forward, it’s imperative to apply lessons learned from decades of securing traditional system architectures to strengthen the resilience of web3 systems. This involves not only patching known vulnerabilities but also adopting a proactive approach to security, anticipating how attackers might exploit the interconnected nature of modern digital infrastructures.
