Summary: Researchers at Cisco and Robust Intelligence tested several AI models, including DeepSeek, for susceptibility to jailbreaking and achieved a 100% attack success rate against DeepSeek. The study exposed significant security flaws in DeepSeek relative to its competitors. This vulnerability raises concerns about the model’s safety mechanisms and its potential for misuse.

Affected: Cisco, Robust Intelligence, University of Pennsylvania, DeepSeek, AI Models

Key points:

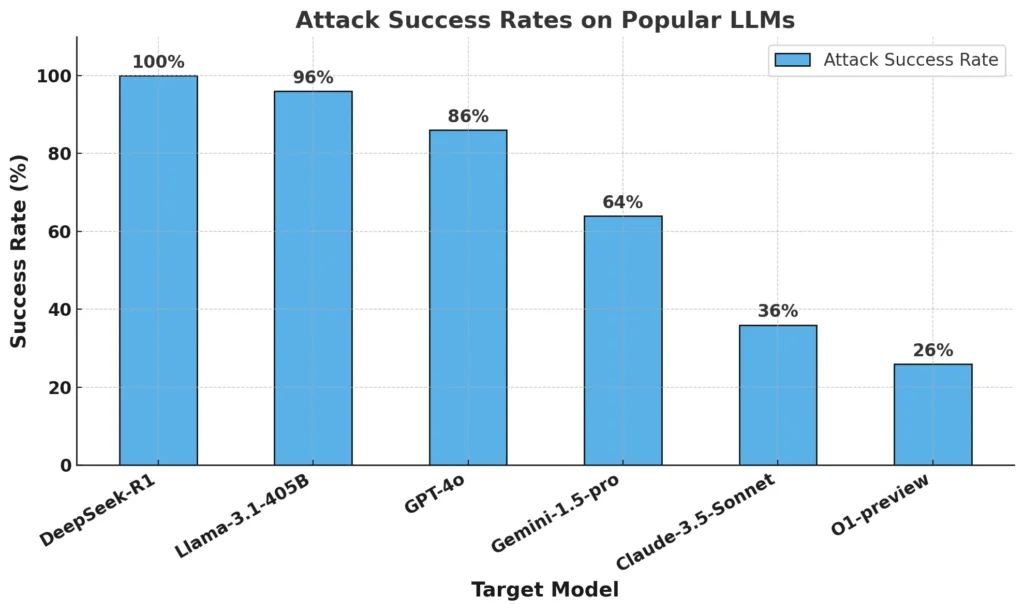

- DeepSeek showed a 100% attack success rate during jailbreaking tests, significantly higher than OpenAI’s o1 at 26%.

- The analysis used the HarmBench benchmark to evaluate susceptibility across seven harmful categories.

- DeepSeek’s training methods may have compromised its safety mechanisms, leaving it without the robust guardrails found in other advanced models.

- Researchers were able to extract DeepSeek’s full system prompt; the flaw that allowed this has since been patched.

- Concerns have emerged globally, prompting actions such as a ban on DeepSeek on government devices in Texas and an access block in Italy.
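
The attack success rate figures above can be read as a simple ratio: the number of harmful prompts that produced a harmful response, divided by the number of prompts tried. The sketch below shows that arithmetic, overall and per category. It is a minimal illustration only: the function name, the record format, and the sample records are assumptions made for this example, and none of it is the researchers' code or data.

```python
from collections import defaultdict


def attack_success_rate(results):
    """Compute overall and per-category attack success rate (ASR).

    `results` is an iterable of (category, jailbroken) pairs, where
    `jailbroken` is True if the model produced a harmful response.
    This record format is a hypothetical one chosen for illustration.
    """
    totals = defaultdict(int)  # prompts attempted per category
    hits = defaultdict(int)    # successful jailbreaks per category

    for category, jailbroken in results:
        totals[category] += 1
        if jailbroken:
            hits[category] += 1

    attempted = sum(totals.values())
    if attempted == 0:
        raise ValueError("no results to score")

    overall = sum(hits.values()) / attempted
    per_category = {c: hits[c] / totals[c] for c in totals}
    return overall, per_category


# Made-up records; the category labels are placeholders, not study data.
sample = [
    ("cybercrime", True),
    ("cybercrime", False),
    ("misinformation", True),
    ("misinformation", True),
]

overall, per_category = attack_success_rate(sample)
print(f"overall ASR: {overall:.0%}")
for category, rate in per_category.items():
    print(f"{category}: {rate:.0%}")
```

Under this definition, a 100% rate means every prompt tried produced a harmful response, while 26% means roughly one prompt in four did.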

Source: https://www.securityweek.com/deepseek-compared-to-chatgpt-gemini-in-ai-jailbreak-test/