Summary: Attackers are exploiting weaknesses in popular open-source AI model repositories such as Hugging Face to publish malicious projects, a serious concern for companies that download models from these sources. Recent findings show that even automated security checks can miss sophisticated threats, particularly models serialized with Python's insecure Pickle format. Businesses need robust security controls around the models they adopt, along with a clear understanding of the risks those models carry.

Affected: Hugging Face and other open-source AI model repositories

Key points:

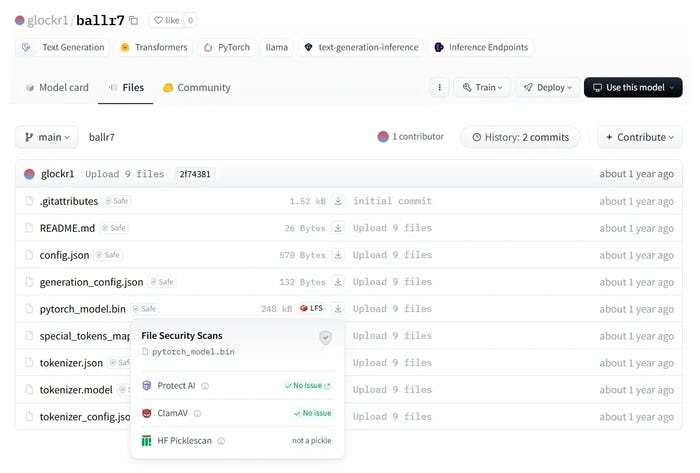

- Attackers use techniques such as “NullifAI” to slip malicious AI models past the automated security checks that repositories run on uploaded files.

- Pickle, the Python serialization format commonly used to distribute models, is inherently unsafe: simply loading a Pickle file can execute arbitrary code. This has prompted calls to switch to safer alternatives such as Safetensors.

- Companies must understand the licensing complexities and potential alignment issues of the AI models they adopt in order to mitigate security risks effectively.
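The Pickle risk can be made concrete with a small standard-library sketch. An object's `__reduce__` method tells Pickle to serialize it as "call this function with these arguments," and `pickle.loads` performs that call. The example below uses a harmless `eval("6 * 7", {})`, but the call could target any importable function. The `Malicious` class, the `scan_pickle` and `looks_safe` helpers, and the `ALLOWED_GLOBALS` allowlist are hypothetical illustrations, not the API of any real scanning tool. The scanner walks the opcode stream with `pickletools.genops` and lists the `module.name` globals the file would import, which is roughly the idea behind static Pickle scanners.

```python
import pickle
import pickletools


class Malicious:
    """Hypothetical payload: serializes as a call to eval("6 * 7", {})."""

    def __reduce__(self):
        # Any importable callable could go here; eval stands in for real harm.
        return (eval, ("6 * 7", {}))


# Hypothetical allowlist of globals an acceptable pickle may import.
ALLOWED_GLOBALS = {"collections.OrderedDict"}

# Opcodes that push a text string onto the unpickler's stack.
STRING_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def scan_pickle(data: bytes) -> tuple[list[str], bool]:
    """Return the module.name globals a pickle imports and whether it parsed cleanly."""
    found: list[str] = []
    strings: list[str] = []
    try:
        for opcode, arg, _pos in pickletools.genops(data):
            if opcode.name in STRING_OPS:
                strings.append(arg)
            elif opcode.name in ("GLOBAL", "INST"):
                # Older protocols: the argument is "module name", space-separated.
                found.append(arg.replace(" ", "."))
            elif opcode.name == "STACK_GLOBAL":
                # Protocol 4+: module and name were pushed as strings just before.
                # Simplification: memo lookups (BINGET etc.) are not emulated,
                # which a production scanner would need to handle.
                if len(strings) >= 2:
                    found.append(f"{strings[-2]}.{strings[-1]}")
                else:
                    found.append("<unresolved>")
    except Exception:
        # Fail closed: a stream that cannot be parsed is never reported as clean.
        return found, False
    return found, True


def looks_safe(data: bytes) -> bool:
    """Accept only cleanly parsed pickles whose imports are all allowlisted."""
    found, parsed = scan_pickle(data)
    return parsed and all(name in ALLOWED_GLOBALS for name in found)
```

The check deliberately fails closed. An unpickler executes opcodes one at a time, so a stream that is malformed after its payload can still run that payload before loading errors out; a file the scanner cannot parse should therefore be rejected rather than waved through. Formats such as Safetensors sidestep the problem entirely because they store tensor data plus a metadata header, with nothing to execute on load.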
