Pickle files in machine learning models hosted on platforms such as Hugging Face pose a growing security threat. Researchers from ReversingLabs discovered malicious code embedded in models that the platform had not flagged as unsafe. The finding exposes gaps in current defenses against unsafe data serialization formats and points to the need for stronger protections.

Affected: Hugging Face, machine learning models, software supply chain security

Key points:

- Rapid growth in AI usage has led to increased attention from threat actors.

- Hugging Face is a popular platform for sharing machine learning models.

- Pickle is a Python module commonly used for serializing ML model data, but it poses serious security risks because deserializing a Pickle file can execute arbitrary code.

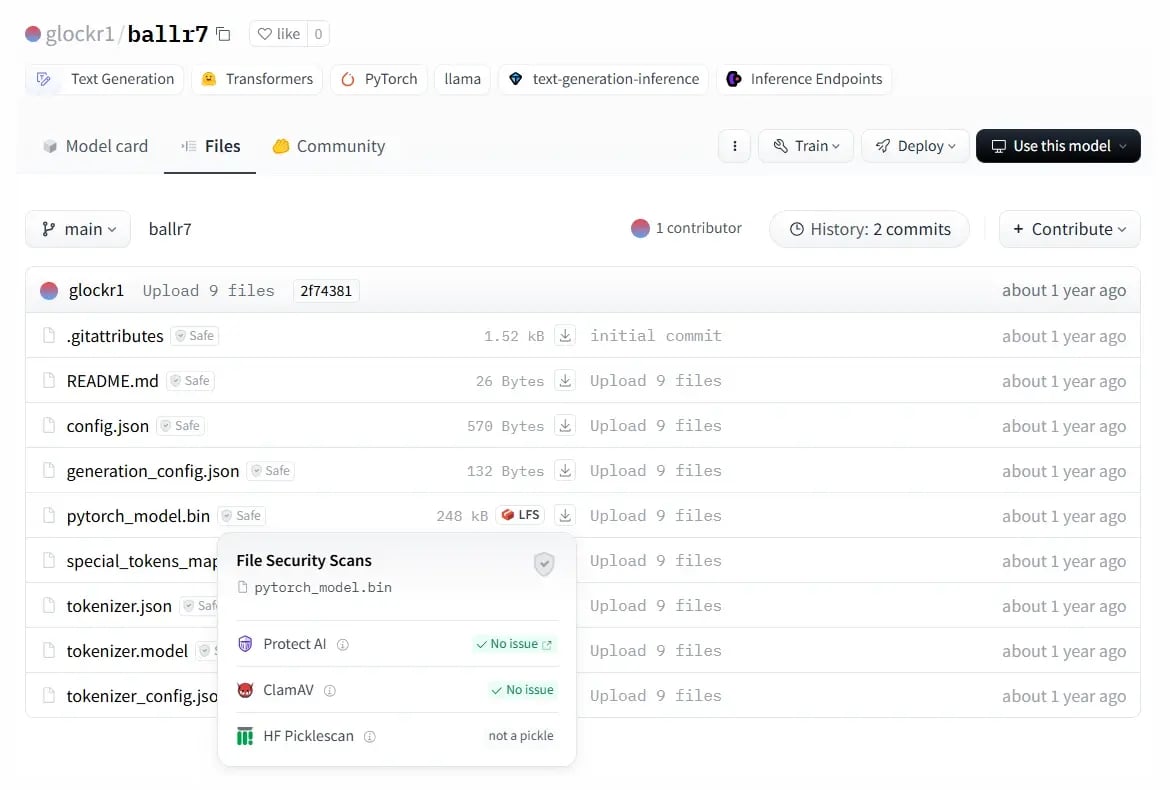

- Recent research revealed malicious code in Hugging Face models using Pickle serialization.

- The technique, named “nullifAI,” evades existing security measures.

- The malicious models used a non-standard compression method that bypassed detection.

- The Picklescan tool showed shortcomings in detecting unsafe Pickle files.

- Hugging Face responded by removing malicious models within 24 hours.

- The researchers outline recommendations for handling Pickle files more safely.
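
One way to inspect a Pickle stream without loading it is to walk its opcodes with the standard-library `pickletools.genops` and list the callables it would import. The sketch below is illustrative only: it is not how Picklescan works and not the ReversingLabs method, and the names `SUSPICIOUS_MODULES`, `list_pickle_imports`, and `looks_suspicious` are hypothetical. The `STACK_GLOBAL` handling is a heuristic that assumes the module and attribute names are the two most recent string constants.

```python
import pickletools

# Hypothetical deny-list for illustration; a real policy would be broader.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "socket", "sys", "builtins"}


def list_pickle_imports(data: bytes) -> list[str]:
    """Return 'module.name' references found in a pickle stream without loading it."""
    found = []
    strings = []  # string constants seen so far (heuristic for STACK_GLOBAL)
    try:
        for opcode, arg, _pos in pickletools.genops(data):
            if opcode.name in ("GLOBAL", "INST"):
                # pickletools reports these arguments as "module name"
                module, _, name = arg.partition(" ")
                found.append(f"{module}.{name}")
            elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:
                # Protocol 4+: module and name are the two preceding strings
                found.append(f"{strings[-2]}.{strings[-1]}")
            elif isinstance(arg, str):
                strings.append(arg)
    except Exception:
        # Truncated or corrupted stream: keep whatever was seen before the break
        pass
    return found


def looks_suspicious(imports: list[str]) -> bool:
    """True if any referenced module is on the (hypothetical) deny-list."""
    return any(item.split(".", 1)[0] in SUSPICIOUS_MODULES for item in imports)
```

The pickle virtual machine runs opcodes in order, so anything that appears before a corrupted byte would still execute at load time. For that reason the sketch keeps the findings gathered before a parse error instead of discarding the file as unreadable.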

MITRE Techniques:

- T1047: Windows Management Instrumentation – The malicious payload executed reverse shell commands.

- T1203: Exploitation for Client Execution – Using Pickle files to execute malicious code upon deserialization.
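
Execution upon deserialization can be shown with a harmless stand-in. In this minimal sketch the hypothetical `Payload` class uses `__reduce__` to tell the unpickler to call `os.getpid`; a real attack would name a harmful callable instead. The `AllowListUnpickler` class follows the "restricting globals" pattern from the Python `pickle` documentation, and its allow-list contents are an assumption chosen for illustration.

```python
import io
import os
import pickle


class Payload:
    """Benign stand-in: unpickling calls os.getpid() instead of rebuilding Payload."""

    def __reduce__(self):
        # (callable, args): the unpickler invokes callable(*args) at load time
        return (os.getpid, ())


class AllowListUnpickler(pickle.Unpickler):
    # Hypothetical allow-list; anything else is refused before it can be called
    ALLOWED = {("collections", "OrderedDict")}

    def find_class(self, module, name):
        if (module, name) in self.ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data: bytes):
    """Load a pickle while refusing any global not on the allow-list."""
    return AllowListUnpickler(io.BytesIO(data)).load()


blob = pickle.dumps(Payload())
result = pickle.loads(blob)  # the callable chosen by the file's author runs here
```

Here `result` holds the return value of `os.getpid()`, not a `Payload` instance, which shows that the file's author decided what ran. Passing the same `blob` to `restricted_loads` raises `pickle.UnpicklingError`.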

Indicators of Compromise:

- [SHA1] 1733506c584dd6801accf7f58dc92a4a1285db1f

- [SHA1] 79601f536b1b351c695507bf37236139f42201b0

- [SHA1] 0dcc38fc90eca38810805bb03b9f6bb44945bbc0

- [SHA1] 85c898c5db096635a21a9e8b5be0a58648205b47

- [IP Address] 107.173.7.141
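
The SHA1 indicators above can be checked against locally downloaded model files. The following is a minimal sketch using only the standard library; the function names `sha1_of_file` and `find_ioc_matches` are hypothetical, as is the idea of passing in a local model cache directory.

```python
import hashlib
from pathlib import Path

# SHA1 indicators listed above
IOC_SHA1 = {
    "1733506c584dd6801accf7f58dc92a4a1285db1f",
    "79601f536b1b351c695507bf37236139f42201b0",
    "0dcc38fc90eca38810805bb03b9f6bb44945bbc0",
    "85c898c5db096635a21a9e8b5be0a58648205b47",
}


def sha1_of_file(path, chunk_size=1 << 20) -> str:
    """Hash a file in chunks so large model files are not read into memory at once."""
    digest = hashlib.sha1()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_ioc_matches(root) -> list[Path]:
    """Return files under root whose SHA1 matches one of the listed indicators."""
    return [
        path
        for path in sorted(Path(root).rglob("*"))
        if path.is_file() and sha1_of_file(path) in IOC_SHA1
    ]
```

A hash match identifies only these exact files. A repacked or modified model would produce a different SHA1, so an empty result does not by itself show that a directory is clean.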

Full Story: https://www.reversinglabs.com/blog/rl-identifies-malware-ml-model-hosted-on-hugging-face