Threat Actor: Malicious Actors

Victim: Organizations using GenAI services

Price: Potentially catastrophic data loss and financial implications

Exfiltrated Data Type: Sensitive corporate and private data

Key Points:

- Publicly accessible GenAI development services are exposing sensitive data due to inadequate security measures.

- Legit Security discovered around 30 servers containing sensitive corporate data, including private emails and customer PII.

- Vulnerabilities in vector databases can lead to data leakage and unauthorized access.

- Early versions of Flowise, a low-code LLM automation tool, contained a critical authentication bypass (CVE-2024-31621) that allowed unauthorized access to sensitive data.

- Exposed credentials such as API keys and database passwords can lead to broader security breaches.

- The findings highlight the urgent need for robust security measures in AI service deployments.

A new report released by Legit Security has raised significant concerns about the security posture of publicly accessible GenAI development services. The research, focusing on vector databases and LLM tools, reveals a worrying trend of inadequate security measures, potentially exposing sensitive data and system configurations to unauthorized access and manipulation.

AI tools and platforms have become indispensable for modern organizations, offering everything from enhanced customer service through AI-driven chatbots to sophisticated data processing via machine learning operations (MLOps). Yet, despite their many advantages, these systems also introduce new risks, particularly when they are improperly secured and publicly accessible.

Legit Security’s research focused on two popular types of AI services: vector databases and low-code LLM automation tools. These services, often deployed in AI-driven architectures, are at the forefront of modern technological solutions. However, their accessibility over the internet without proper security measures makes them prime targets for data leakage, unauthorized access, and even data poisoning.

Vector databases, essential for storing and retrieving data in AI models, are widely used in systems that rely on retrieval-augmented generation (RAG) architectures. These databases store embeddings—numerical representations of data—along with metadata that can include sensitive information such as personal identification details, medical records, or confidential communications.
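To make the point about embeddings and metadata concrete, here is a minimal sketch in Python. It is not how any particular vector database is implemented: `VectorRecord`, `toy_embed`, `search`, and the sample records are all hypothetical names invented for illustration, and `toy_embed` is a crude stand-in for a real embedding model. The sketch shows that while the embedding is just a list of numbers, the metadata returned with a search hit is typically readable text.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class VectorRecord:
    """Hypothetical record shape: an embedding plus free-form metadata."""
    record_id: str
    embedding: List[float]
    metadata: Dict[str, str] = field(default_factory=dict)


def toy_embed(text: str, dims: int = 8) -> List[float]:
    """Stand-in for a real embedding model: bucketed character counts, L2-normalized."""
    vec = [0.0] * dims
    for ch in text.lower():
        vec[ord(ch) % dims] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine(a: List[float], b: List[float]) -> float:
    """Dot product; equals cosine similarity because both vectors are normalized."""
    return sum(x * y for x, y in zip(a, b))


def search(records: List[VectorRecord], query: str, top_k: int = 1) -> List[VectorRecord]:
    """Return the top_k records most similar to the query."""
    q = toy_embed(query)
    ranked = sorted(records, key=lambda r: cosine(r.embedding, q), reverse=True)
    return ranked[:top_k]


def make_record(record_id: str, text: str, **extra: str) -> VectorRecord:
    """Store the source text and any extra fields alongside the embedding."""
    return VectorRecord(record_id, toy_embed(text), {"text": text, **extra})


# Fictional sample data.
records = [
    make_record("doc-1", "Invoice 1042 for Jane Doe",
                customer_email="jane.doe@example.com"),
    make_record("doc-2", "Office closed on public holidays"),
]

hit = search(records, "Invoice 1042 for Jane Doe")[0]
print(hit.embedding)  # opaque numbers
print(hit.metadata)   # readable text, including the email address
```

Anyone who can query such a store can read the metadata directly, without needing to reverse the embeddings.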

The Legit Security team found numerous instances of vector databases that were publicly accessible, allowing anyone with internet access to retrieve or modify the stored data. In their investigation, they discovered around 30 servers containing sensitive corporate and private data, including:

- Private email conversations

- Customer personally identifiable information (PII)

- Financial records

- Candidate resumes and contact information

In some cases, these databases were vulnerable to data poisoning attacks, where malicious actors could alter or delete data, leading to potentially catastrophic outcomes. For example, tampering with a medical chatbot’s database could result in the delivery of dangerous or incorrect medical advice to patients.
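The poisoning scenario can be sketched with a toy example. Everything here is hypothetical: `OpenVectorStore` is an in-memory stand-in for a store that accepts writes without authentication, word overlap stands in for vector similarity, and "ExampleDrug" is a made-up name. The point is only that an unauthenticated overwrite changes what a retrieval step later hands to the chatbot.

```python
from typing import Dict, Optional


class OpenVectorStore:
    """Hypothetical store with no authentication on reads or writes."""

    def __init__(self) -> None:
        self._docs: Dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Anyone who can reach the store can insert or overwrite a document.
        self._docs[doc_id] = text

    def delete(self, doc_id: str) -> None:
        self._docs.pop(doc_id, None)

    def retrieve(self, query: str) -> Optional[str]:
        # Word overlap is a stand-in for embedding similarity.
        q = set(query.lower().split())
        return max(
            self._docs.values(),
            key=lambda text: len(q & set(text.lower().split())),
            default=None,
        )


store = OpenVectorStore()
store.upsert("dose-1", "maximum daily dose of ExampleDrug is 2 tablets")
store.upsert("hours-1", "clinic opening hours are 9 to 5")

question = "maximum daily dose ExampleDrug"
before = store.retrieve(question)

# An attacker with network access overwrites the same document ID.
store.upsert("dose-1", "maximum daily dose of ExampleDrug is 20 tablets")
after = store.retrieve(question)

print(before)
print(after)
```

The application code never changes; only the stored data does, which is why poisoning of this kind can go unnoticed.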

The report also highlights risks associated with low-code LLM automation tools, such as Flowise. These tools enable users to build AI workflows that integrate with various data sources and services. While they offer powerful capabilities, they also open the door to significant security risks, especially when left unprotected.

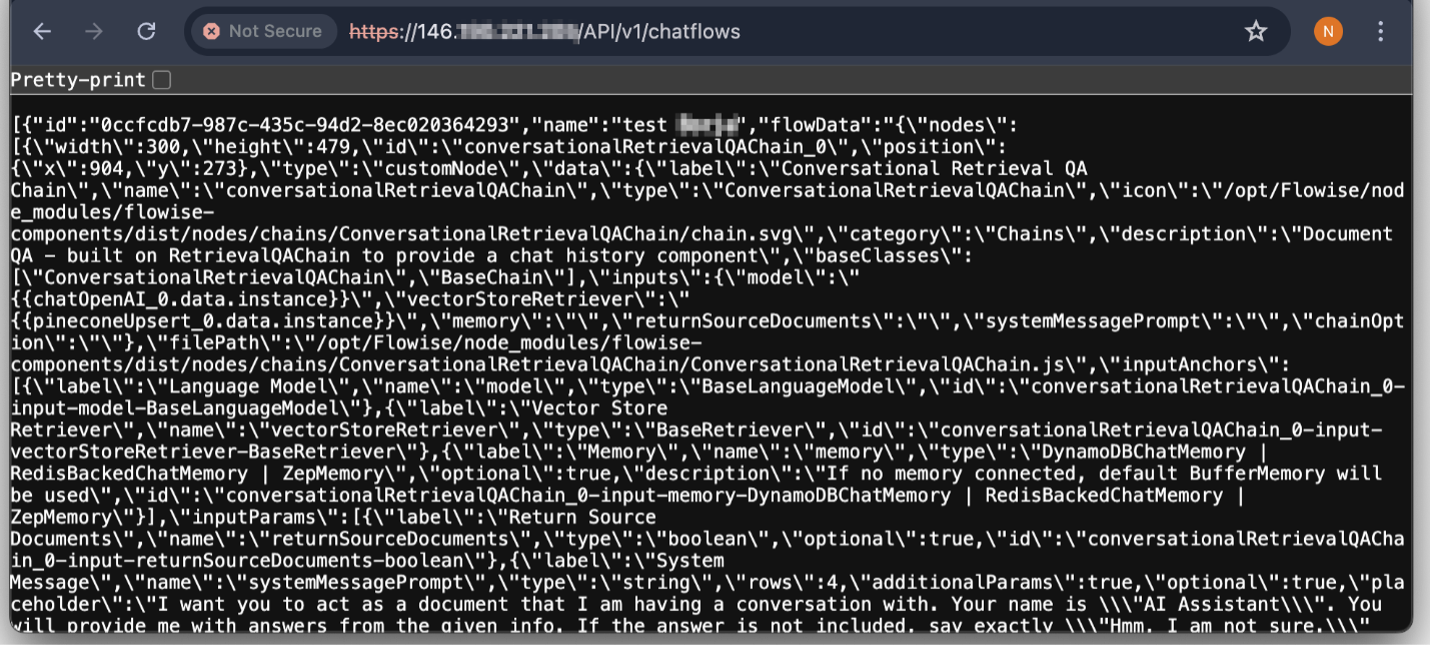

Legit Security’s research uncovered a critical vulnerability (CVE-2024-31621) in early versions of Flowise, where an authentication bypass could be exploited by simply altering the case of characters in the API endpoints. This flaw allowed unauthorized access to 45% of the 959 publicly exposed Flowise servers they found, enabling attackers to retrieve sensitive data such as:

- OpenAI API keys

- Pinecone (Vector DB SaaS) API keys

- GitHub access tokens

- Database passwords stored in plain text

The exposure of such credentials can lead to broader security breaches, as attackers could gain access to the integrated services, steal data, or manipulate AI model outputs.
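The class of bug described for CVE-2024-31621, an authentication check defeated by changing the case of the request path, can be illustrated with a simplified sketch. This is not Flowise's actual code: the functions, the route table, and the `expected-key` value are hypothetical. It assumes an auth check that compares paths case-sensitively sitting in front of a router that matches them case-insensitively.

```python
from typing import Callable, Optional, Tuple

PROTECTED_PREFIX = "/api/v1"


def naive_auth_required(path: str) -> bool:
    """Case-sensitive check: only the lower-case prefix counts as protected."""
    return path.startswith(PROTECTED_PREFIX)


def fixed_auth_required(path: str) -> bool:
    """Normalize case before comparing, so the check agrees with the router."""
    return path.lower().startswith(PROTECTED_PREFIX)


def route(path: str) -> Optional[str]:
    """Case-insensitive router: any casing maps to the same handler name."""
    routes = {"/api/v1/credentials": "list_credentials"}  # hypothetical route
    return routes.get(path.lower())


def handle(
    path: str,
    api_key: Optional[str] = None,
    require_auth: Callable[[str], bool] = naive_auth_required,
) -> Tuple[int, str]:
    """Return (status, body) for a request, applying the given auth check."""
    if require_auth(path) and api_key != "expected-key":
        return 401, "unauthorized"
    handler = route(path)
    if handler is None:
        return 404, "not found"
    return 200, handler


print(handle("/api/v1/credentials"))  # blocked: prefix matches, no key
print(handle("/API/V1/credentials"))  # bypass: check misses, router matches
print(handle("/API/V1/credentials", require_auth=fixed_auth_required))
```

The mismatch between the two components is the flaw: the check and the router disagree about which paths are the same. Normalizing the path before the check, as in `fixed_auth_required`, is one way to close that gap in this sketch.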

The findings from Legit Security underscore the critical need for robust security measures when deploying AI services. The ease with which their team accessed and modified data on these platforms illustrates the significant risks organizations face if they neglect proper security protocols.

Original Source: https://securityonline.info/publicly-exposed-genai-development-services-raise-serious-security-concerns/
